Guest post in From Poverty to Power

There is growing interest in safe-fail experimentation, failing fast and rapid real-time feedback loops. This is part of the suite of recommendations emerging from the Doing Development Differently crowd, as well as others. It also fits with the rhetoric of the ministers responsible for aid budgets in Australia and the UK about quickly closing down projects and programs when they are deemed not to be working.

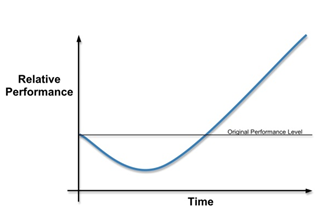

When it comes to complex settings there is a lot of merit in ‘crawling the design space’ and testing options, but I think there are also a number of concerns with this that should be getting more air time. Firstly, as Michael Woolcock and others have noted, it can simply take time for a program to generate positive, tangible and measurable outcomes, and it may be that on some measures a program that may ultimately be successful dips below the ‘it’s working’ curve on its way to that success: what some people call the ‘J curve’.

Furthermore, and more importantly, failing fast ignores some key aspects of the complex adaptive systems in which programs are embedded. In the area of health policy, Alan Shiell and colleagues have noted that non-linear changes in complex systems are hard to measure in their early stages, and that simply aggregating individual observable changes fails to explore the emergent properties that those changes can produce in combination.

They note, for example, that in many areas of public health policy, such as gun control, ‘multiple “advocacy episodes” may have no discernible impact on policy but then a tipping point is reached, a phase transition occurs, and new laws are introduced. In the search for cause and effect, the role played by advocates in creating the conditions for change is easily overlooked in favour of prominent and immediately prior events. To minimise the risk of premature evaluation and wrongful attribution, economists must become comfortable working with evidence of intermediate changes in either process or impact that act as preconditions for a phase transition’. Premature evaluation in cases like these could result in potentially successful programs being closed, or funding being stopped.

So how might programs better measure and communicate their propensity to seize opportunities (or critical junctures) if and when they arise?

Firstly, in keeping with what a number of complexity theorists and some development practitioners have long suggested, it is often changes in relationships that lie at the heart of the processes which lead to more substantive change. It is these changes in relational practice, i.e. how people, organisations and states relate to each other, that can eventually create new rules of the game, or institutions. However, what those institutional forms will look like is an emergent property and is not predictable in advance. What is therefore required is information on changing relationships, which can provide some reassurance that changes to the workings of the underlying system are occurring. An interesting example of this approach is provided by the What Works and Why (W3) project, which applies systems thinking to understanding the role of peer-led programs in a public health response, and their influence in their community, policy and sector systems.

The second thing that needs to be explored is the set of feedback loops between the project and the system within which it is located. These are often ignored because monitoring and evaluation approaches tend to be of the ‘project-out’ variety, i.e. they start with the ‘intervention’ and desperately seek directly attributable changes amongst the population groups they seek to benefit.

‘Context-in’ approaches, on the other hand, recognise that the value of an activity or project can change over time in line with changes in the operating environment, and that multiple factors are liable to have induced these changes. So, for example, a mass shooting at a school can radically change the value that a gun control program is perceived to have, which in turn can affect its ability to be successful. Enabling community organisations, local government departments, activists and other actors in the system to be part of this feedback is an important part of the picture. Using citizen-generated data along the lines that organisations like Ushahidi promote can achieve this under the right circumstances and when politics is adequately factored in.

The third element which needs to be recognised is that simply adding up individual project outcomes, such as changes in health, income or education, is not going to capture emergent properties of the system as a whole. As has been noted in India, the collective ‘social effect’ of establishing quotas for getting women into local government has important multiplier effects. Repeated exposure to female leaders “changed villagers’ beliefs on female leader effectiveness and reduced their association of women with domestic activities”. Outcomes such as these need to be measured at multiple levels, in different ways appropriate to the task, and the measurement should factor in context rather than seek to eliminate it.

Now of course people putting up money to support progressive social change need to have some indication, during the process, that what they are supporting can, or more realistically might, become a swan, or indeed a phoenix. I argue that the following are some of the things they need to be concerned about: a) how the nature of relationships is changing (captured, for example, through imaginative social network analysis), b) whether effective learning and feedback loops are in place, and c) whether the demands they place encourage multi-level analysis, learning and sense-making which avoid simplistic aggregation and adding up of results.

So I am all for the move to do iterative, adaptive and experimental development, but if we are serious about going beyond saying ‘context matters’, then exhortations to ‘fail fast’ need to be more thoroughly debated.

My thanks to colleagues Alan Shiell and Penny Hawe for comments on an earlier draft.

And in a similar vein, here’s Oxfam’s Kimberly Bowman with a great post on the new Real Geek blog